A fundamental question in machine learning is determining what a model can and cannot learn. Graphs come in handy whenever we deal with relations between objects. There are two main sources of graphs in ML: 1) graphs that come from networks (e.g., social, biological, and technological networks), and 2) graphs built from flat (often vision) data, where a graph serves as a useful nonparametric basis and an effective data representation for tasks such as spectral clustering, manifold learning, or semi-supervised learning. Other popular uses of graphs include skills search inside a company and decision-making on graphs, which suits recommender systems and online advertising. Most graph machine learning (GML) models aim to encode a graph structure (e.g., a node, an edge, a subgraph, or the entire graph) as a low-dimensional embedding, which is then used as the input to downstream machine learning tasks in an end-to-end or decoupled manner. The AGL system, for example, focuses mainly on graph neural networks (GNNs), a widely used category of GML models. Each GNN layer generates an intermediate embedding by aggregating information from the target node’s in-edge neighbors.
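To make the aggregation step concrete, here is a minimal sketch of one simplified layer in plain Python. It is an illustration under stated assumptions, not how any particular GNN library does it: the toy graph, the feature vectors, and the function names are hypothetical, and the learned weight matrices and nonlinearities that real GNN layers apply are left out. Each node's new vector is simply the mean of its own vector and those of its in-edge neighbors.

```python
# Hypothetical toy graph: directed edges (source -> target).
edges = [(0, 2), (1, 2), (2, 3)]
# Hypothetical 2-dimensional input features per node.
features = {0: [1.0, 0.0], 1: [0.0, 1.0], 2: [1.0, 1.0], 3: [0.0, 0.0]}


def in_neighbors(edges):
    """Map each target node to the list of nodes with an edge pointing at it."""
    result = {}
    for src, dst in edges:
        result.setdefault(dst, []).append(src)
    return result


def aggregate_layer(features, edges):
    """One simplified layer: average a node's vector with its in-neighbors' vectors."""
    neighbors = in_neighbors(edges)
    updated = {}
    for node, vec in features.items():
        group = [vec] + [features[n] for n in neighbors.get(node, [])]
        # zip(*group) walks the vectors dimension by dimension.
        updated[node] = [sum(col) / len(group) for col in zip(*group)]
    return updated


hidden = aggregate_layer(features, edges)
```

Applying `aggregate_layer` again to `hidden` would let information travel two hops, which is roughly what stacking layers achieves.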

Graphs are a ubiquitous data structure, employed extensively within computer science and related fields. Many real-world applications can be readily modeled as graphs, such as recommender systems, social networks, single molecules, and protein interaction networks. Graphs are not only useful as structured knowledge repositories: they also play a very important role in modern machine learning. This course will cover both conventional algorithms and the most recent research on the analysis of graphs from a machine learning perspective. The topics that we will cover include basic graph algorithms such as graph clustering, summarization, and anomaly detection, and more advanced research topics such as network embedding, graph neural networks, and deep reinforcement learning on graphs.

Representation learning approaches for machine learning on graphs offer a powerful alternative to traditional feature engineering. In recent years, these approaches have consistently pushed the state of the art on tasks such as node classification and link prediction. However, much work remains to be done, both in improving the performance of these methods and—perhaps more importantly—in developing consistent theoretical frameworks that future innovations can build upon.

Methods

- Graph neural networks on node-level, graph-level embedding

- Graph neural networks on graph matching

- Scalable methods for large graphs

- Dynamic/incremental graph-embedding

- Learning representation on heterogeneous networks, knowledge graphs

- Deep generative models for graph generation/semantic-preserving transformation

- Graph2seq, graph2tree, and graph2graph models

- Deep reinforcement learning on graphs

- Adversarial machine learning on graphs

- Spatial and temporal graph prediction and generation

- Graph mining

- Probabilistic and graphical models for structured data

- Heterogeneous/multi-model graph analysis

- Network embedding models

- Statistical models of the graph structure

- Combinatorial graph methods

- Semi-supervised learning, active learning, transductive inference, and transfer learning in the context of graph

One of the recurring criticisms of the current state of Artificial Intelligence (AI) is the deficiency caused by a lack of background knowledge. A knowledge graph, in which entities are represented as nodes and relations among entities as directed edges, can significantly close this gap. Building and maintaining a large-scale, accurate, and fresh knowledge graph, however, is a significant endeavor.
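As a rough sketch of that data structure, a knowledge graph can be held as a list of (head, relation, tail) triples and indexed by head entity so that lookups follow the edge direction. The facts, relation names, and the `follow` helper below are hypothetical and chosen only for illustration.

```python
from collections import defaultdict

# Hypothetical facts, stored as (head entity, relation, tail entity) triples.
triples = [
    ("Seattle", "located_in", "Washington"),
    ("Washington", "located_in", "USA"),
    ("ACL", "is_a", "conference"),
]

# Index outgoing edges by head entity so lookups follow edge direction.
outgoing = defaultdict(list)
for head, relation, tail in triples:
    outgoing[head].append((relation, tail))


def follow(entity, relation):
    """Return every tail reachable from `entity` over one `relation` edge."""
    return [t for r, t in outgoing[entity] if r == relation]
```

Because the edges are directed, `follow("Seattle", "located_in")` finds `"Washington"`, while the reverse lookup from `"Washington"` does not find `"Seattle"`.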

ACL (Association for Computational Linguistics) is the top conference in NLP. Each year, more and more papers use graphs with natural language. ACL 2020 stats (dates: July 5-10; where: online):

- 3088 submissions (2906 submissions in 2019)

- 571/208 long and short papers (25% overall acceptance rate; 447/213 in 2019)

- 42/6 long/short graph papers (7%/3% of total)
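The percentages in the list above can be reproduced with a few lines of arithmetic. This sketch assumes that the overall rate is accepted papers divided by submissions and that "of total" means the share of accepted long (or short) papers. Both readings are assumptions, not something the ACL figures state explicitly.

```python
submissions = 3088
long_accepted, short_accepted = 571, 208
long_graph, short_graph = 42, 6

# Assumed definition: all accepted papers over all submissions.
overall_rate = (long_accepted + short_accepted) / submissions
# Assumed definition: graph papers over accepted papers of the same length class.
long_graph_share = long_graph / long_accepted
short_graph_share = short_graph / short_accepted

print(f"overall acceptance: {overall_rate:.1%}")
print(f"graph share of long papers: {long_graph_share:.1%}")
print(f"graph share of short papers: {short_graph_share:.1%}")
```

Under these assumptions the three values come out at roughly 25%, 7%, and 3%, in line with the rounded numbers quoted in the list.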

I also computed some stats about graph papers at ICLR 2020. A few things stand out.

(1) Every third paper on graphs is accepted, a clear indication that GML is becoming popular;

(2) On average, scores of [6,6,8] are needed to get accepted; [6,6,6] would be borderline.

(3) The area chair (AC) can sometimes save a paper even if it got low scores. This is rather good, as it means that reviewers are not the only ones who decide.

(4) Likewise, the AC can reject a paper even if the reviewers unanimously accept it. I think that happens mostly because the paper does not present enough experimental comparison to SOTA.

The top papers all received accepts; however, the type of acceptance (poster, spotlight, or talk) is not well correlated with the average rating. For example, the top-rated papers received only strong accepts (equivalently, an average rating of 8) yet got only poster or spotlight decisions. In turn, the only GML paper that earned a long presentation, “GraphZoom: A Multi-level Spectral Approach for Accurate and Scalable Graph Embedding”, has one weak accept and two accepts, a score shared by 7 other papers.

From the ontological systems required to map the world’s knowledge, to the information extraction systems needed to collect accurate and high-coverage facts from both structured and unstructured sources, and from the quality assurance process necessary for weeding out errors and inconsistencies, to the frequent updates demanded by a fresh knowledge graph, the tasks present numerous challenges and still constitute many open research areas. Learning representations from highly structured objects such as graphs is useful for a variety of machine learning applications. Besides reducing the engineering effort, these representations can lead to greater predictive power.

Applications:

- Learning and reasoning (machine reasoning, inductive logic programming, theory proving)

- Natural language processing (information extraction, semantic parsing (AMR, SQL), text generation, machine comprehension)

- Bioinformatics (drug discovery, protein generation, protein structure prediction)

- Program synthesis and analysis

- Automated planning

- Reinforcement learning (multi-agent learning, compositional imitation learning)

- Financial security (Anti-Money Laundering)

- Computer vision (object relation reasoning, graph-based representations for segmentation/tracking)

- Analysis of social media

- Analysis of biological networks

- Knowledge graph construction

- Large-scale analysis and modeling

So how do we work with Graph Machine Learning? What is the first step?

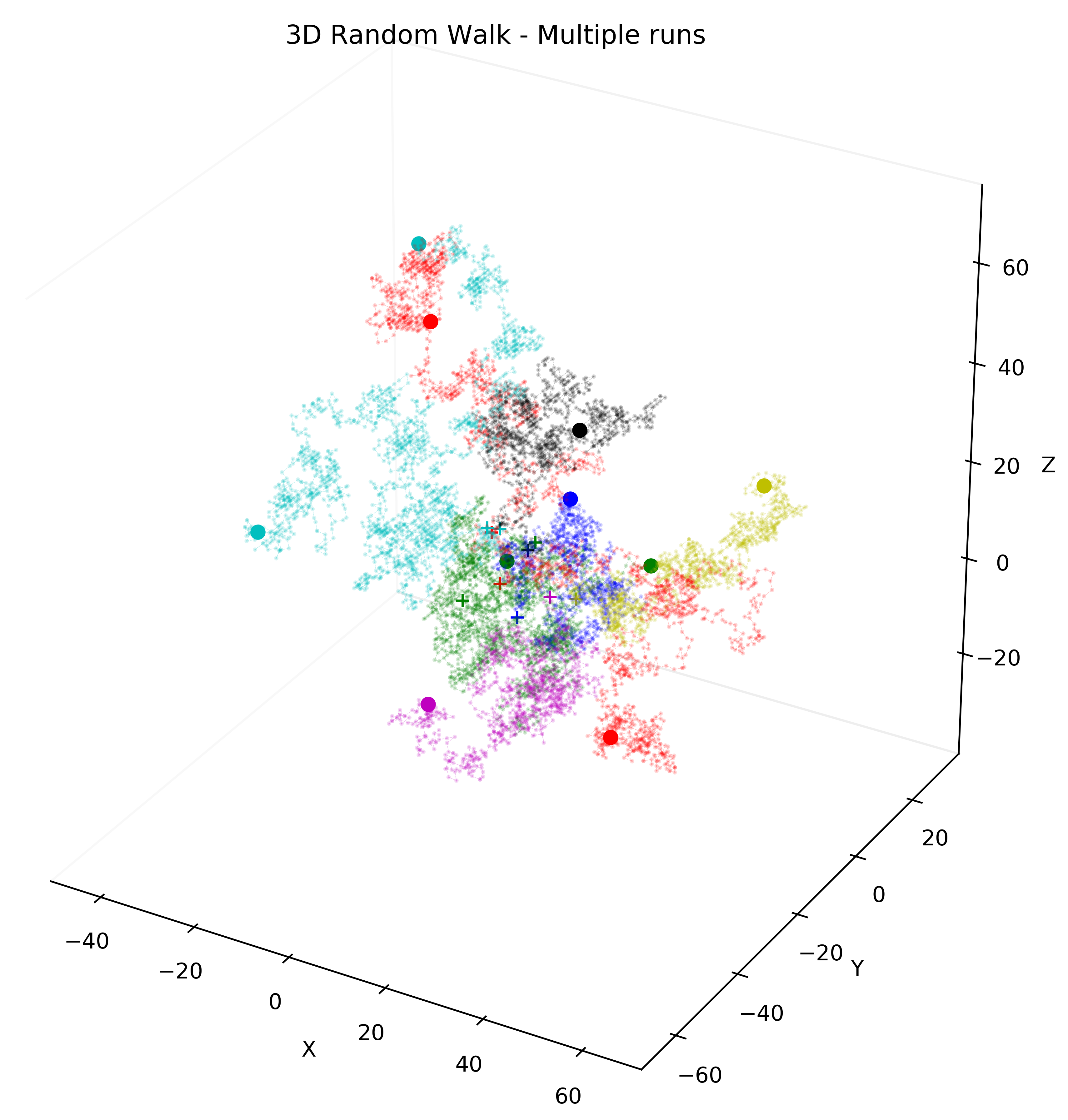

The central problem in machine learning on graphs is finding a way to incorporate information about the structure of the graph into the machine learning model. In node2vec, the system learns a mapping of nodes to a low-dimensional feature space that maximizes the likelihood of preserving the network neighborhoods of nodes. It defines a flexible notion of a node’s network neighborhood and designs a biased random walk procedure. The algorithm follows two principles:

- the ability to learn representations that embed nodes from the same network community closely together

- the ability to learn representations where nodes that share similar roles have similar embeddings.

The key contribution is in defining a flexible notion of a node’s network neighborhood by developing a family of biased random walks, which efficiently explore diverse neighborhoods of a given node.
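The biased walk can be sketched in a few lines of plain Python. This is a simplified illustration for an unweighted graph, not the reference implementation: the toy graph and function names are hypothetical, while `p` (return parameter) and `q` (in-out parameter) follow the naming used in the node2vec paper. A low `p` makes the walk more likely to step back, and a low `q` pushes it further away from where it came from.

```python
import random

# Hypothetical undirected toy graph as an adjacency list.
graph = {
    "a": ["b", "c"],
    "b": ["a", "c", "d"],
    "c": ["a", "b"],
    "d": ["b", "e"],
    "e": ["d"],
}


def step_weights(graph, prev, curr, p, q):
    """Unnormalized weights for leaving `curr`, given the walk arrived from `prev`."""
    weights = []
    for nxt in graph[curr]:
        if nxt == prev:
            weights.append(1.0 / p)  # return to the previous node
        elif nxt in graph[prev]:
            weights.append(1.0)      # stay within the previous node's neighborhood
        else:
            weights.append(1.0 / q)  # move further away
    return weights


def biased_walk(graph, start, length, p=1.0, q=1.0, rng=random):
    """Sample one walk of `length` nodes using the second-order bias above."""
    walk = [start]
    while len(walk) < length:
        curr = walk[-1]
        if len(walk) == 1:
            walk.append(rng.choice(graph[curr]))  # first step is uniform
        else:
            weights = step_weights(graph, walk[-2], curr, p, q)
            walk.append(rng.choices(graph[curr], weights=weights)[0])
    return walk


walk = biased_walk(graph, "a", length=6, p=0.5, q=2.0, rng=random.Random(0))
```

With `p=1` and `q=1` every neighbor gets the same weight, so the procedure reduces to an ordinary uniform random walk.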

Both node2vec and DeepWalk are built on the random walk. If you want to appreciate the beauty of mathematics, and one of the finest inventions of the human brain (calculus), you must read Leonard Mlodinow's book The Drunkard's Walk. Even more than for the theory of relativity, Albert Einstein should be credited for connecting statistics with physics by explaining “the drunkard’s walk” or, in sober scientific terms, “the random walk”. Humans want to remain independent and in control of random events, or at least to have the illusion of control. That cognitive bias is well demonstrated in behavioral finance: it shows up when individuals and institutions manage money, and it defies modern portfolio theory. Hence now is the time to integrate behavioral finance with modern portfolio theory. That integration has arrived, and you should not be surprised that trading houses have started taking advantage of it (it is already happening!). It is well worth investing $10 to read this book. It will certainly challenge your intellectual underpinnings and provide a different perspective.

The best review of this book was written by the all-time great mathematician Stephen Hawking. You may ignore a lesser mortal like me, but you should listen to Stephen Hawking: “In The Drunkard's Walk Leonard Mlodinow provides readers with a wonderfully readable guide to how the mathematical laws of randomness affect our lives. With insight he shows how the hallmarks of chance are apparent in the course of events all around us. The understanding of randomness has brought about profound changes in the way we view our surroundings, and our universe. I am pleased that Leonard has skillfully explained this important branch of mathematics.” (Stephen Hawking)

The node2vec framework learns low-dimensional representations for nodes in a graph by optimizing a neighborhood-preserving objective. The objective is flexible, and the algorithm accommodates various definitions of network neighborhoods by simulating biased random walks. Specifically, it provides a way of balancing the exploration-exploitation tradeoff, which in turn leads to representations obeying a spectrum of equivalences from homophily to structural equivalence.

An embedding is a mapping of a discrete (categorical) variable to a vector of continuous numbers. In the context of neural networks, embeddings are low-dimensional, learned, continuous vector representations of discrete variables. It is a word that every data scientist has heard by now, but mostly in the context of NLP. So why do we even bother embedding things?
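In its simplest form, an embedding is just a lookup table with one row of numbers per discrete value. The sketch below uses hypothetical category names and a hypothetical dimension, and it fills the table with random numbers. In a real model, training would adjust those numbers.

```python
import random

categories = ["cat", "dog", "fish"]  # hypothetical discrete values
dim = 4                              # hypothetical embedding size
rng = random.Random(0)

# Map each category to a row index, then keep one row of `dim` floats per category.
index = {name: i for i, name in enumerate(categories)}
table = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in categories]


def embed(name):
    """Look up the vector for a category by its integer index."""
    return table[index[name]]
```

Calling `embed("dog")` returns a list of four floats, and that list is what a downstream model would consume instead of the raw label.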

As I see it, creating quality embeddings and feeding them into models is the exact opposite of the famous saying “Garbage in, garbage out”. When you feed low-quality data into your models, you put the entire load of learning on your model, as it will have to learn all the necessary conclusions that could be derived from the data. On the contrary, when you use quality embeddings, you have already put some knowledge into your data and thus make the task of learning the problem easier for your models. Another point to think about is information vs. domain knowledge. For example, let us consider word embeddings (word2vec) and bag-of-words representations. While both can carry the entire information about which words are in a sentence, word embeddings also include domain knowledge, such as the relationships between words.

For folks who have no idea what word2vec means: Word2vec is a two-layer neural net that processes text by “vectorizing” words. Its input is a text corpus and its output is a set of vectors: feature vectors that represent words in that corpus. While Word2vec is not a deep neural network, it turns text into a numerical form that deep neural networks can understand. Word2vec was created and published in 2013 by a team of researchers led by Tomas Mikolov at Google, and it was patented. The algorithm has been subsequently analyzed and explained by other researchers. Embedding vectors created using the Word2vec algorithm have some advantages compared to earlier algorithms such as latent semantic analysis.

A common technique for capturing such domain relationships between nodes is node2vec, which aims to create embeddings for nodes in a graph (in the G(V, E, W) sense of the word). The most natural way I can think of to explain node2vec is to explain how it generates a “corpus”: if we understand word2vec, we already know how to embed a corpus. So how do we generate this corpus from a graph? That is exactly the innovative part of node2vec, and it does so intelligently by using a sampling strategy.
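To show what such a "corpus" might look like, here is a minimal sketch. It uses plain uniform random walks (the DeepWalk-style simplification, not node2vec's biased sampling), turns each walk into a "sentence" of string tokens, and then lists the (center, context) pairs that a skip-gram model like word2vec would train on. The toy graph, function names, and parameter values are all hypothetical.

```python
import random

# Hypothetical toy graph as an adjacency list.
graph = {0: [1, 2], 1: [0, 2], 2: [0, 1, 3], 3: [2]}


def uniform_walk(graph, start, length, rng):
    """Sample a walk by picking a neighbor uniformly at random at each step."""
    walk = [start]
    while len(walk) < length:
        walk.append(rng.choice(graph[walk[-1]]))
    return walk


def build_corpus(graph, num_walks, walk_length, seed=0):
    """Return walks as lists of string tokens, one 'sentence' per walk."""
    rng = random.Random(seed)
    corpus = []
    for _ in range(num_walks):
        for node in graph:
            walk = uniform_walk(graph, node, walk_length, rng)
            corpus.append([str(n) for n in walk])
    return corpus


def skipgram_pairs(sentence, window):
    """List (center, context) pairs for tokens at most `window` positions apart."""
    pairs = []
    for i, center in enumerate(sentence):
        lo, hi = max(0, i - window), min(len(sentence), i + window + 1)
        pairs.extend((center, sentence[j]) for j in range(lo, hi) if j != i)
    return pairs


corpus = build_corpus(graph, num_walks=2, walk_length=5)
pairs = skipgram_pairs(corpus[0], window=2)
```

Nodes that often appear near each other in walks end up in many shared pairs, which is why their learned vectors tend to land close together.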

For example, here is a snippet that uses a Python 3 implementation of the node2vec algorithm of Aditya Grover, Jure Leskovec, and Vid Kocijan. Reference: A. Grover, J. Leskovec. node2vec: Scalable Feature Learning for Networks. ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD), 2016.

```python
import networkx as nx
from node2vec import Node2Vec

# Output paths (placeholder names, not from the original snippet; choose your own)
EMBEDDING_FILENAME = "embeddings.emb"
EMBEDDING_MODEL_FILENAME = "embeddings.model"

# Create a graph
graph = nx.fast_gnp_random_graph(n=100, p=0.5)

# Precompute probabilities and generate walks
node2vec = Node2Vec(graph, dimensions=64, walk_length=30, num_walks=200, workers=4)

# Embed nodes. Any keywords acceptable by gensim.Word2Vec can be passed;
# `dimensions` and `workers` are passed automatically (from the Node2Vec constructor)
model = node2vec.fit(window=10, min_count=1, batch_words=4)

# Look for most similar nodes (output node names are always strings)
model.wv.most_similar('2')

# Save embeddings for later use
model.wv.save_word2vec_format(EMBEDDING_FILENAME)

# Save model for later use
model.save(EMBEDDING_MODEL_FILENAME)
```

In recent years, there has been growing interest from the digital humanities in knowledge graphs as a data modeling paradigm. Prediction tasks over nodes and edges in networks require careful effort in engineering the features used by learning algorithms. Recent research in the broader field of representation learning has led to significant progress in automating prediction by learning the features themselves.

However, present feature learning approaches are not expressive enough to capture the diversity of connectivity patterns observed in networks, and node2vec is one of the approaches that could help with such problems. In the next few months, I am planning to write a book about Graph Machine Learning (GML) for exchanging ideas and methods for mining and learning with graphs, developing new common understandings of the problems at hand, sharing data sets where applicable, and leveraging existing knowledge from different disciplines.

Summary

Graph neural networks (GNNs) still face many challenges when they are used to model highly structured data that are time-evolving, multi-relational, and multi-modal, and when they are used to map between graphs and other highly structured data such as sequences, trees, and other graphs. More importantly, new application domains for GNNs that emerge from real-world problems introduce significantly more challenges for GNNs.

In many scientific fields, many important problems can be best expressed with a complex structure, e.g., graph or manifold structure, such as social networks and drug discovery. On one hand, these graph-structured data can encode complicated pairwise relationships for learning more informative representations; On the other hand, the structural and semantic information in sequence data can be exploited to augment original sequence data by incorporating the domain-specific knowledge.

Deep learning models are at the core of research in artificial intelligence nowadays. In recent years, research on deep learning on graphs has grown rapidly, especially for graph representation learning and graph generation. New neural network architectures for graph-structured data have achieved remarkable performance in some well-known domains such as social networks and bioinformatics. They have also spread to other fields of science, including computer vision, natural language processing, inductive logic programming, program synthesis and analysis, automated planning, reinforcement learning, financial security, and adversarial machine learning. Most methods rely on training and storing a unique embedding for each individual node. Moreover, most evaluation setups assume that the attributes, embeddings, and edge lists of all nodes used for both training and testing can fit in main memory, an assumption that is at odds with the reality of most application domains, where graphs are massive, evolving, and often stored in a distributed fashion. Developing representation learning frameworks that truly scale to realistic production settings is necessary to keep the disconnect between the academic research community and the application consumers of these approaches from widening.

The goal of my new book is to bring together knowledge from researchers in academia, industry, and government, and to create a forum for discussing recent advances in graph analysis. In doing so, we aim to better understand the overarching principles and the limitations of our current methods and to inspire research on new algorithms and techniques for mining and learning with graphs. Last but not least, I would like to introduce Graph Machine Learning techniques that could help in the discovery of a COVID-19 vaccine and other solutions. In these unprecedented times, the famous ACL conference will be held online for the first time this year, from my home town of Seattle.

Please let me know what you think about such a book.

Key Concepts in Graph Machine Learning:

- Graphs: In the context of GML, a graph is a set of objects (nodes) that are connected by relationships (edges). These can represent social networks, molecular structures, transportation networks, and more.

- Nodes and Edges: Nodes represent entities (e.g., people, proteins), while edges represent the relationships or interactions between these entities (e.g., friendships, chemical bonds).

- Graph Representation: Graphs can be represented in various forms such as adjacency matrices, edge lists, or adjacency lists, depending on the application and computational considerations.

- Node Embeddings: This involves mapping nodes to a low-dimensional space while preserving the graph's topological structure. Node embeddings facilitate the application of machine learning algorithms by converting graph data into a format that these algorithms can process.

- Graph Neural Networks (GNNs): A class of deep learning models designed specifically for graph data. GNNs aggregate information from a node’s neighbors to learn representations for each node and/or the entire graph.
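The three graph representations named in the list above can be built from one another in a few lines. This is a minimal sketch on a hypothetical undirected graph with four nodes, and the variable names are chosen only for illustration.

```python
# Hypothetical undirected graph with 4 nodes, given as an edge list.
num_nodes = 4
edge_list = [(0, 1), (0, 2), (2, 3)]

# Adjacency list: each node maps to the nodes it touches.
adjacency_list = {node: [] for node in range(num_nodes)}
for u, v in edge_list:
    adjacency_list[u].append(v)
    adjacency_list[v].append(u)

# Adjacency matrix: entry [u][v] is 1 when an edge joins u and v.
adjacency_matrix = [[0] * num_nodes for _ in range(num_nodes)]
for u, v in edge_list:
    adjacency_matrix[u][v] = 1
    adjacency_matrix[v][u] = 1
```

The edge list and adjacency list grow with the number of edges, while the matrix always needs `num_nodes * num_nodes` entries. This is one reason sparse graphs are usually not stored as dense matrices.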

Applications of Graph Machine Learning:

- Social Network Analysis: Understanding community structures, influential users, and information spread within networks like Facebook or Twitter.

- Recommendation Systems: Leveraging user-item interaction graphs to recommend products or content, as seen in platforms like Amazon and Netflix.

- Fraud Detection: Identifying unusual patterns or connections in financial transaction networks to detect fraudulent activity.

- Drug Discovery: Analyzing molecular structures as graphs to discover new drugs or predict the properties of compounds.

- Traffic and Route Optimization: Using road networks to improve route planning and traffic management.

- Knowledge Graphs: Enhancing search engines and virtual assistants by understanding relationships between different concepts and entities.

Challenges and Considerations:

- Scalability: Processing large-scale graphs can be computationally intensive due to the complex relationships and the sheer size of the data.

- Dynamic Graphs: Many real-world graphs are dynamic, meaning their structure changes over time. Modeling these changes presents additional challenges.

- Heterogeneity: Graphs can contain various types of nodes and edges, making it difficult to apply uniform learning algorithms.

- Interpretability: Understanding and interpreting the decisions made by GML models, especially GNNs, can be challenging but is crucial for certain applications like healthcare.

- Data Quality and Availability: The effectiveness of GML models depends on the quality and completeness of the graph data.

Getting Started with Graph Machine Learning:

To start with GML, one should have a foundational understanding of machine learning and graph theory. Practical experience can be gained through libraries like TensorFlow GNN, PyTorch Geometric, or DGL (Deep Graph Library), which provide tools specifically designed for graph data and GNNs. Engaging with community projects, tutorials, and research papers can also accelerate the learning process.

Graph Machine Learning represents a powerful approach to understanding complex structures and relationships in data, and it continues to grow as more applications across different industries are explored.